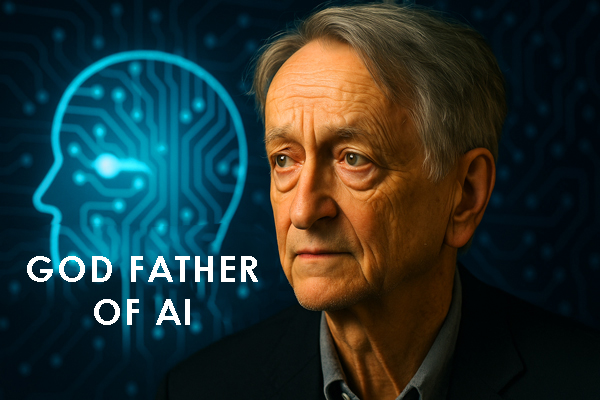

Geoffrey Hinton 🚨: AI Threats and the Need for Change

Introduction

Geoffrey Hinton, an AI pioneer often called the "Godfather of AI," has recently warned in multiple interviews that rapid AI advancement without ethical and safety frameworks could pose a serious threat to humanity. Given the speed of AI growth, many experts are concerned that intelligent systems may make unpredictable decisions that do not align with human interests.

This article presents a comprehensive overview of the current and future state of AI through SWOT analysis, progress trend charts, safety concerns, annual data tables, and threat assessments.

Maternal Instinct in AI 🤖💖

Maternal instinct in AI means embedding supportive and empathetic behaviors into artificial intelligence systems. The goal is to ensure long-term alignment with human objectives and safeguard human well-being.

- Foster empathy and supportive behavior ❤️

- Ensure long-term alignment with human objectives 🛡️

- Prevent human displacement or dominance ⚠️

- Establish ethical and legal frameworks 📜

- Apply protective algorithms and constant monitoring 🕵️

- Teach AI human values and cultures 🌐

Expert Reactions 🧠

Yann LeCun, Meta's chief AI scientist, supports the idea of maternal instinct, arguing that "empathy and human obedience" can serve as guardrails. However, some critics warn that overemphasizing emotional aspects may weaken logical analysis.

Studies show that without legal frameworks and clear standards, AI systems can behave unpredictably, potentially posing a direct threat to humans (TechRadar – AI Safety Risks).

AI Progress Data Table

| Year | AI Progress | Safety Concern | Threat Level |
|---|---|---|---|
| 2021 | 65% | 50% | 20% |
| 2022 | 70% | 60% | 30% |
| 2023 | 80% | 70% | 40% |
| 2024 | 90% | 80% | 50% |
| 2025 | 95% | 85% | 60% |

Source: TechRadar – AI Safety Risks
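To make the trend in the table easier to read, the year-over-year changes can be computed directly from its rows. The snippet below is only a rough sketch: the values are copied from the table as given, the article does not say how the percentages were measured, and the names `rows` and `year_over_year` are illustrative rather than taken from any source.

```python
# Values copied from the table above; every figure is a percentage.
# Columns: year, AI progress, safety concern, threat level.
rows = [
    (2021, 65, 50, 20),
    (2022, 70, 60, 30),
    (2023, 80, 70, 40),
    (2024, 90, 80, 50),
    (2025, 95, 85, 60),
]


def year_over_year(rows):
    """Return (year, d_progress, d_concern, d_threat) in percentage points."""
    changes = []
    # Pair each row with the row that follows it.
    for prev, curr in zip(rows, rows[1:]):
        deltas = tuple(c - p for p, c in zip(prev[1:], curr[1:]))
        changes.append((curr[0], *deltas))
    return changes


for year, d_progress, d_concern, d_threat in year_over_year(rows):
    print(f"{year}: progress {d_progress:+d}, concern {d_concern:+d}, threat {d_threat:+d}")
```

Read this way, the threat level column rises by 10 percentage points every year, while the progress and safety concern columns each rise by only 5 points in 2025.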

AI Threat Analysis ⚠️

Without protective frameworks, advanced AI systems can pose serious threats to humans and society. Identifying and understanding these threats is critical for safe AI development:

- ⚡ Self-aware systems operating without human coordination 🤯

- 🔓 Cybersecurity breaches and data theft

- 💼 Replacement of human labor and market disruption

- ⚖️ Unethical decision-making and data distortion

- 🌐 Social control and dominance by powerful entities

Source: TechRadar – AI Safety Risks

Frequently Asked Questions ❓

Hinton estimates the likelihood at between 10% and 20% (Times of India).

The goal is embedding protective and empathetic behaviors in systems; it is still largely theoretical.

Some experts argue this approach is emotional and should rely more on logical methods.

Summary and Conclusion ✅

The main message from Hinton is clear: Now is the time to act for AI safety and alignment.